At this year’s CES, Qualcomm showcased the Snapdragon Ride Flex platform and hosted demonstration vehicle rides, highlighting applications for its scalable multi-SoC technology.

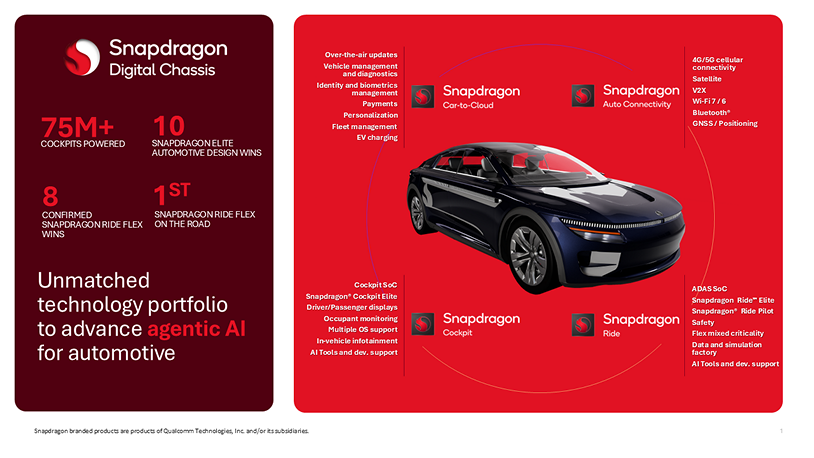

The Snapdragon Ride Flex platform is described as a collection of systems-on-chip (SoCs) that work together as a scalable central compute solution for today’s vehicles. The platform can run mixed-criticality workloads, such as digital cockpit infotainment systems alongside advanced driver assistance systems (ADAS) and automated driving functions, on a single piece of hardware.

Qualcomm claims the platform scales from entry-level performance to Level 4 (L4) autonomous driving capabilities, allowing OEMs to add features and functions through software updates. By using one SoC, the Ride Flex platform also saves cost, Qualcomm says, allowing OEMs to reduce overall material costs, streamline workflows and simplify system design.

During CES in Las Vegas, Frankie Youd took part in a live demonstration, hosted by Qualcomm, showcasing Snapdragon Ride Flex. Frankie spoke to Nilesh Parekh, senior director of product management for Snapdragon Ride Flex, to learn more about the system and what it can achieve within a vehicle.

Just Auto (JA): Could you explain the Ride Flex system and how it works?

Nilesh Parekh (NP): We have the Snapdragon SA8775P, which is commercially sampling now, hosting both our ADAS functions and cockpit functions on a single system-on-chip (SoC). As part of that, we have our Snapdragon Ride Assist stack. That stack is equipped with a one-video, one-radar sensor set to provide regulatory and level 2 ADAS functions.

The cockpit has a user-based experience. It has navigation, and it also has the Google AAA voice assistant, which gives you more than voice assistance: with AI, it understands context. The digital cluster also visualises objects detected on the road, such as markings and signs.

Ride Flex includes several SoCs: we have an entry tier, a mid-tier and a premium tier. The premium tier is going to launch in the second half of the year, based on the Snapdragon Ride Elite product.

What technology can be seen within the vehicle?

Within the vehicle we have a driver monitoring system. As you drive, the ADAS view becomes active, and the system starts monitoring the driver’s face to make sure they are looking forward; if there is any distraction, it issues visual and audio notifications. It can also detect actions such as holding a coffee cup or a mobile phone.

The technology can detect pedestrians, cyclists, motorcyclists, the road markings, the road size, and the speed limit. The human-machine interface was designed in-house by Qualcomm. We also have a collaboration with Unreal Engine.

One driver-assist feature it offers is lane keeping. The vehicle is able to stay in its lane and maintain a set speed, and this all happens on the same SoC. When the Ride Assist system is activated, it keeps the vehicle centred in the lane and at a safe distance from the vehicle in front.

We describe it as collaborative driving. If you turn an indicator on, the vehicle gives you the freedom to change lanes, but as soon as you turn the indicator off, the system engages again.
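
As a purely illustrative sketch (not Qualcomm's implementation), the indicator behaviour described here can be modelled as a simple rule: lane centring is active only while the assist is enabled and the indicator is off. The class and method names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LaneAssist:
    """Hypothetical model of the 'collaborative driving' rule."""

    enabled: bool = True  # assumption: the driver has switched the assist on

    def is_steering_active(self, indicator_on: bool) -> bool:
        # The system yields to the driver while an indicator is on
        # and re-engages as soon as it is switched off.
        return self.enabled and not indicator_on


assist = LaneAssist()
# Indicator off, then on (lane change), then off again.
states = [assist.is_steering_active(ind) for ind in (False, True, False)]
print(states)
```

A production system would depend on many more inputs, such as lane confidence and driver attention; this sketch captures only the handover rule mentioned in the interview.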

The vehicle also has Google AAA. This is the Google AI assistant, which we have integrated into this platform in collaboration with Google. You can ask it anything from general queries, such as what the weather is like today, to more complex questions, such as what the latest movie in the Avatar series is.

While you are driving you can have music playing, so you have the infotainment system on and the ADAS running at the same time; the beauty is, it’s all working on a single platform.

The idea is that you can offer more than ADAS features on the cockpit, and you have one electronic control unit (ECU) doing everything, rather than two separate ECUs.

What are the benefits of the software working on one system-on-chip (SoC)?

The ADAS workloads and the cockpit workloads run on a single SoC. The main benefit, of course, is the consolidation of ECUs, which results in a cost benefit for the OEMs. At the same time, that consolidation brings the functions onto the same SoC and into the same memory space. It also reduces latency when you are passing camera frames from the ADAS system to the cockpit system for visualisation.

For an augmented reality head-up display (HUD), for example, you benefit when the cameras are on the same SoC that does the augmentation and visualises the objects you are detecting on the road.

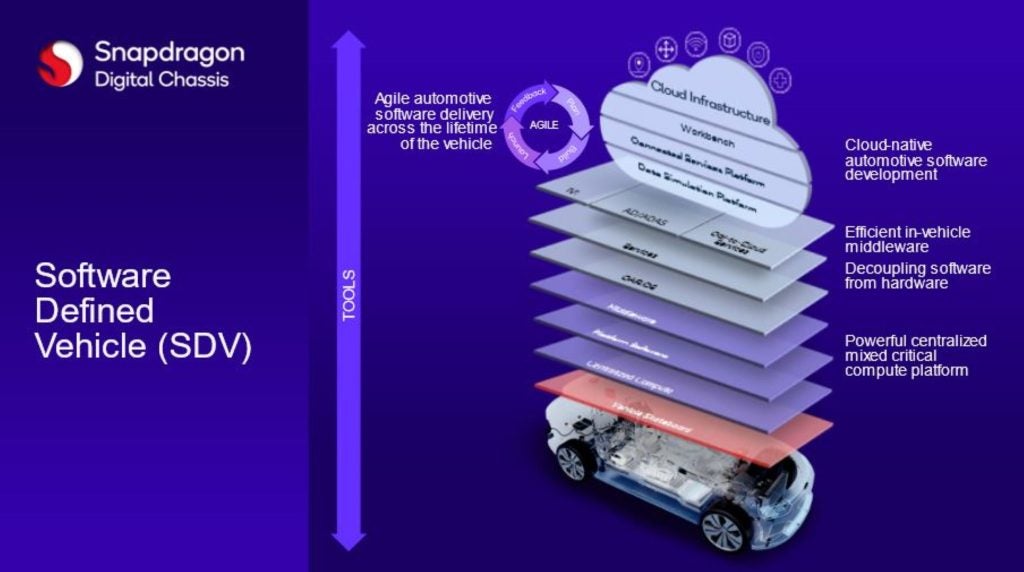

When you have a single SoC, you have a single software baseline to maintain and upgrade during the lifetime of the product; that allows you to implement your software-defined vehicle much more efficiently.

What are some of the challenges this technology presents?

I think the key challenge of such a system is hosting mixed-criticality workloads on a single SoC, on a single software baseline. You need to implement quality-of-service mechanisms that allow this coexistence.

The higher-priority, safety-critical workloads get resources ahead of the lower-priority workloads. That’s really the key area of innovation, and there are a lot of details to it: the hardware-level quality of service and the software mechanisms to exercise that quality of service, especially for the common resources.
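
To make the idea concrete, here is a minimal sketch of strict-priority allocation of a shared resource. It is an editorial illustration under stated assumptions, not a description of how Snapdragon Ride Flex implements quality of service: the workload names, priority scale and capacity units are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    priority: int  # assumption: lower number = more safety-critical
    demand: int    # requested units of a shared resource (e.g. bandwidth)


def allocate(workloads, capacity):
    """Grant shared-resource units in strict priority order."""
    grants = {}
    remaining = capacity
    for w in sorted(workloads, key=lambda w: w.priority):
        granted = min(w.demand, remaining)  # never exceed what is left
        grants[w.name] = granted
        remaining -= granted
    return grants


workloads = [
    Workload("media_playback", priority=2, demand=40),
    Workload("lane_keeping", priority=0, demand=50),
    Workload("digital_cluster", priority=1, demand=30),
]
grants = allocate(workloads, capacity=100)
print(grants)
```

With 100 units available and 120 requested, the safety-critical workload is served in full and the shortfall lands on the lowest-priority workload. Real systems typically combine this kind of prioritisation with guaranteed minimums and hardware-enforced isolation.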

Does the vehicle need Internet to allow the AI assistant to function?

This is a hybrid architecture, where some of the tasks can be done locally. The AI does not have to access the cloud all the time. With the collaboration we have with Google, some of the tasks can be done locally.

Your connection may not be available all the time, and if you need some specific information, such as asking the assistant how to roll down the windows, you don’t need to access the cloud for that data.
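
The hybrid approach described here can be sketched as a simple routing decision. This is a hypothetical illustration only: the intent names and the `route_query` function are assumptions, not part of any Qualcomm or Google API.

```python
# Assumption: a small set of vehicle-related intents that an on-device
# model could answer without a network connection.
LOCAL_INTENTS = {"roll_down_windows", "set_temperature"}


def route_query(intent: str, online: bool) -> str:
    """Decide where a query is handled: on-device, in the cloud, or not at all."""
    if intent in LOCAL_INTENTS:
        return "local"        # handled on-device regardless of connectivity
    if online:
        return "cloud"        # general queries go to the cloud when reachable
    return "unavailable"      # no connection and no local capability


print(route_query("roll_down_windows", online=False))
print(route_query("weather_today", online=True))
print(route_query("weather_today", online=False))
```

The point of the design is that vehicle-specific questions keep working when connectivity drops, while open-ended queries use the cloud when it is reachable.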